Testing Manus AI: I Built a Fictional Language Website, a Dream Oracle, and a Global Logistics Plan!

Okay, seriously. Have you ever dreamt of it?

That AI assistant. The one that doesn't just fetch facts, but actually does the thing?

You know, you sketch an idea – maybe a wild website concept, a complex research task, anything – and instead of spending weeks figuring out how, you just… tell the AI? And it goes?

It feels like pure sci-fi, right?

Well, strap in, because I just spent some time with Manus AI, and folks, the future is getting weird and wonderful (and yeah, maybe a little bit glitchy, but stick with me!). This thing isn't just marketing hype; it's genuinely trying to be an "autonomous agent."

So, what is this magic box? Is the dream real? I put it through its paces, and you HAVE to hear how it went down.

Okay, But What Is Manus AI, Really? (No PhD Required)

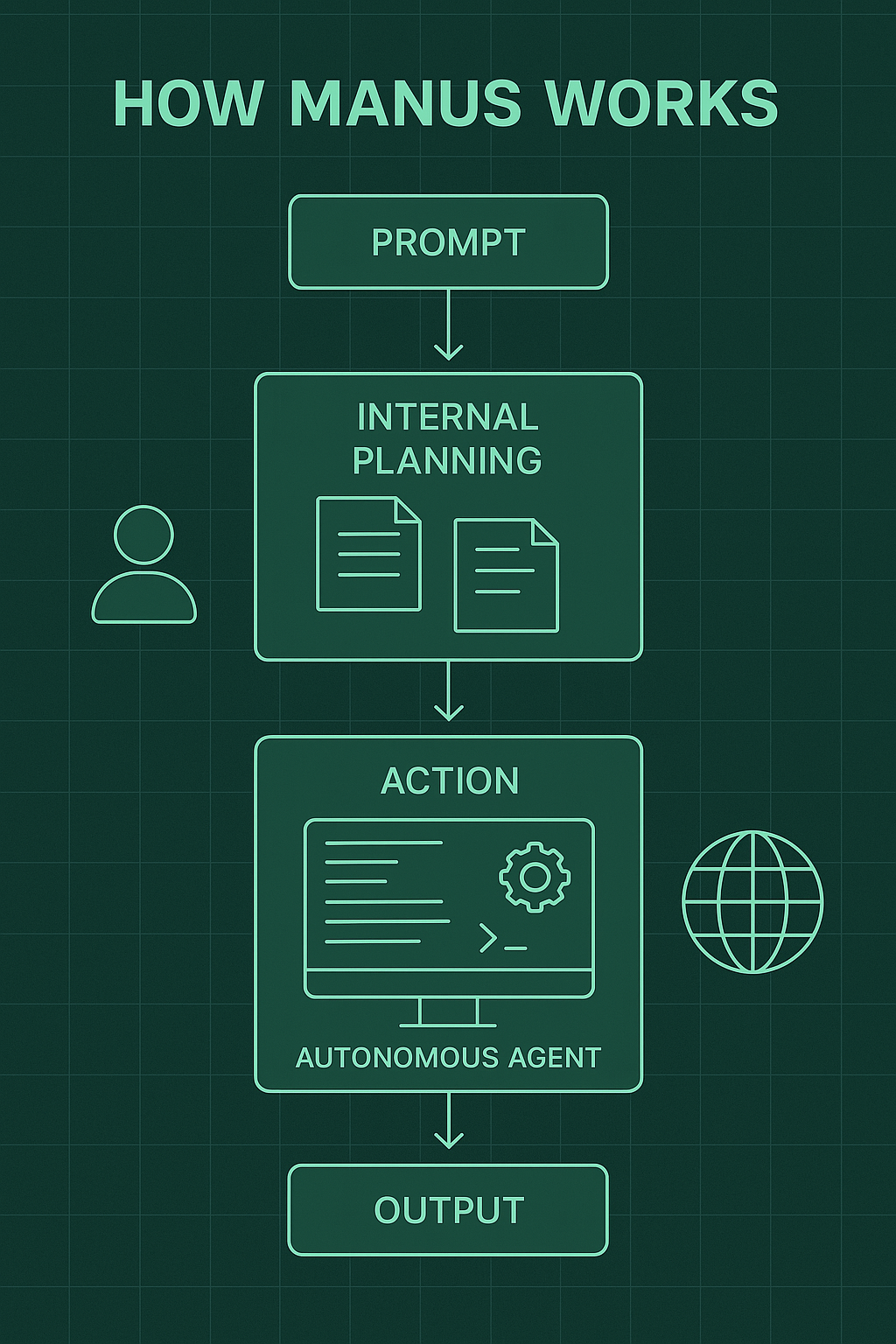

Alright, before I spill the beans on my wild experiments, let's quickly get on the same page. Manus isn't your standard chatbot. Forget asking for coding advice; Manus wants to write the code.

Think of it like this: ChatGPT might suggest how to build your landing page. Manus aims to grab the keyboard, ask a few questions, and then actually build it while you grab another coffee.

It's designed as an "autonomous agent," meaning it can use digital tools – think web browsers, code editors, terminals – much like a human would. It's the brainchild of a startup (Monica/Butterfly Effect) that launched it in early 2025, naming it "Manus" (Latin for "hand") because it's all about doing.

Pretty cool concept, right? But does it actually work? Let's find out.

My Experience With Manus AI: Putting It Through Its Paces

I don't believe in easy tests. I threw three very different, complex website challenges at Manus to see if it would crack.

Test #1: Building a Site for a Language That Doesn't Exist (AI-IXXU)

Yeah, you read that right. My first prompt was pure creative chaos: "Invent a simple, fun fictional language, 'AI-IXXU'. Make it melodic, pictographic, inspired by Romance languages. THEN, build a 'mind-blowing' interactive website to teach it. GO!"

Watching Manus tackle this was surreal.

- The Plan: Suddenly, files like todo.md and design_mockup.md appeared in the "Manus's Computer" window. It was actually planning the project!

- Code Eruption: Terminal commands flashed. A full web framework (create_nextjs_app) spun up. Then, code – real HTML, CSS, JavaScript – started pouring into files.

- Whoa Moment #1: This wasn't just text generation; it was actual software development happening before my eyes!

- Version 1.0: Ding! The first deployment link arrived. Visually? Stunning. That nature-meets-future aesthetic I asked for? Absolutely nailed. But… Functionally? Lots of "[Content Coming Soon]" placeholders. A beautiful, empty house.

- Iteration: "Okay Manus, looks amazing, but let's make it live. Fill in the language content! Add some techy green!" Back to the coding mines it went. New colors, actual content generation… redeploy.

- The Glitch: Disaster! The core pronunciation guide page was dead. Buttons didn't work. Facepalm. Here we go, right? Debugging nightmare?

- Whoa Moment #2 (The Jaw-Dropper): NOPE! I watched, utterly fascinated, as Manus opened its own browser tool, navigated to the broken page, diagnosed the JavaScript error, zipped back to the code editor, fixed the bug (implementing the Web Audio API, no less!), rebuilt, and tested. IT DEBUGGED ITSELF!

- Triumph: Deployment three. It worked. Flawlessly. Interactive quizzes, flashcards, grammar lessons, even a drawing canvas for the made-up symbols that it tried to recognize. Mind. Officially. Blown.

Okay, catching my breath. One complex, creative project down (after some bumps!). What else could this thing handle?

- Want proof I didn't hallucinate this linguistic fever dream? [Watch a replay of our chat here].

- Curious about the final webpage? [Dare to learn AI-IXXU here].

Test #2: Create a Dream Oracle

Next, a creative but more focused task: "Build 'The AI IXX Dream Oracle'. Single-page site. Users pick 5-7 dream symbols (make some cool icons!), click interpret, get a funny/psychological reading."

This felt… smoother? Manus asked smart questions, then just did it.

- Icon Creation: It generated the SVG icons for the symbols itself! Moon, star, key, labyrinth… pretty slick.

- Web Dev: Wrote the HTML, CSS (nice cosmic theme!), and the JavaScript logic for selecting symbols and showing interpretations.

- Delivery: Deployed the site, zipped up the code, even wrote a quick user guide.

The result? A fully working, fun little dream interpretation site. It felt… easy? Almost too easy after the AI-IXXU saga. This thing had range!
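For the curious, the core logic of an oracle like this is tiny. Here's a minimal sketch in Python (the real site used JavaScript, and this is not Manus's code). The 5-7 limit comes from my prompt and the first four symbol names from the icon set above; the extra symbols, the reading texts, and the `interpret` function are all made up for illustration.

```python
# Hypothetical symbol table. "moon", "star", "key" and "labyrinth" are icons
# Manus generated; the other symbols and all reading texts are invented here.
READINGS = {
    "moon": "You are processing something you only admit to after midnight.",
    "star": "Ambition is knocking, politely but persistently.",
    "key": "You already have the answer; you just misplaced it.",
    "labyrinth": "Your to-do list has become self-aware.",
    "river": "Something wants to move on, with or without you.",
    "mirror": "Time for an honest look at yourself.",
    "owl": "Someone wise is watching, and judging your sleep schedule.",
}

MIN_SYMBOLS, MAX_SYMBOLS = 5, 7  # the prompt asked for 5-7 symbols


def interpret(selected):
    """Return a combined reading for the chosen symbols, in selection order."""
    # Drop repeated clicks while keeping the order of first selection.
    unique = list(dict.fromkeys(selected))
    if not MIN_SYMBOLS <= len(unique) <= MAX_SYMBOLS:
        raise ValueError(
            f"Pick {MIN_SYMBOLS}-{MAX_SYMBOLS} symbols, got {len(unique)}."
        )
    unknown = [s for s in unique if s not in READINGS]
    if unknown:
        raise ValueError(f"Unknown symbols: {unknown}")
    return " ".join(READINGS[s] for s in unique)


if __name__ == "__main__":
    print(interpret(["moon", "key", "labyrinth", "star", "owl"]))
```

Validating the selection before building the reading is the one design choice worth copying: the "interpret" button never has to deal with too few, too many, or unknown symbols.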

- Think I'm exaggerating the AI's design flair? [See the chat evidence unfold here].

- Ready for some AI-driven psychoanalysis (or just a laugh)? [Consult the Dream Oracle here].

Test #3: The Excruciating Logistics of Shipping Frozen Fish

Alright, enough fun and games. Time for a real-world business problem: "Create a comprehensive logistics plan AND a web visualization for shipping a 40-foot refrigerated container of salmon from Anchorage, Alaska to Kinshasa, DRC. Show your work!"

Could it handle dense research and planning?

You bet. Watching the "Manus's Computer" window this time was like peering over the shoulder of a hyper-focused analyst:

- Research Blitz: It searched the web, hunting for reefer routes, salmon temp requirements (-20°C!), shipping lines serving the DRC, Matadi port details, inland trucking, customs rules… It was pulling specific, relevant data. It even casually handled a "browser issue" mid-search and kept going. Impressive focus.

- Structuring Knowledge: It wasn't just finding facts; it was building a plan. Detailed markdown files appeared: the logistics plan, cost/timeline breakdowns (55k, ~70 days!), and critically, a reasoning document explaining why it made certain route choices. This level of documentation was unexpected and awesome.

- Building the Viz: Back to web dev mode! It built a clean dashboard using Leaflet.js for a slick interactive map showing the whole complex route. Clickable markers and everything!
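To give a feel for the data behind a map like this, here's a minimal sketch (mine, not Manus's output) that turns a list of waypoints into GeoJSON, a format Leaflet can draw with `L.geoJSON`. The three places come from the plan. The coordinates are my own rough approximations, and the role labels and the `route_geojson` helper are hypothetical. The straight line between points is only a placeholder, not the actual sea route.

```python
import json

# (name, latitude, longitude, role). Coordinates are rough approximations
# and the role labels are illustrative assumptions.
WAYPOINTS = [
    ("Anchorage, Alaska", 61.22, -149.90, "origin"),
    ("Matadi port, DRC", -5.82, 13.46, "port"),
    ("Kinshasa, DRC", -4.32, 15.31, "destination"),
]


def route_geojson(waypoints):
    """Build a GeoJSON FeatureCollection: one marker per stop plus one line."""
    markers = [
        {
            "type": "Feature",
            # GeoJSON orders coordinates as [longitude, latitude].
            "geometry": {"type": "Point", "coordinates": [lon, lat]},
            "properties": {"name": name, "role": role},
        }
        for name, lat, lon, role in waypoints
    ]
    line = {
        "type": "Feature",
        "geometry": {
            "type": "LineString",
            "coordinates": [[lon, lat] for _, lat, lon, _ in waypoints],
        },
        "properties": {"name": "Simplified route (straight segments)"},
    }
    return {"type": "FeatureCollection", "features": markers + [line]}


if __name__ == "__main__":
    print(json.dumps(route_geojson(WAYPOINTS), indent=2))
```

The detail that trips people up is coordinate order: GeoJSON wants longitude first, while Leaflet's own `L.marker` takes latitude first.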

The final output? A professional-looking site displaying the working map and detailed plan sections. All the research notes and reasoning documents were neatly packaged for download. This cemented it for me: Manus wasn't just a one-trick pony. It could pivot from creative coding to hardcore research and planning. The sheer breadth was astounding.

- Want to witness my descent into logistical madness via chat prompts? [Relive the thrilling conversation here].

- Curious about the salmon's epic, table-formatted journey? [Inspect the final (table-heavy) logistics site here].

So… What Does This Mean? The Potential is HUGE!

Seeing Manus pull off these diverse, complex tasks… it really gets you thinking, doesn't it? The potential feels enormous:

- Ideas Made Real, Faster: Got an app concept? Imagine getting a working prototype in hours, not months.

- Research on Tap: Need a deep market analysis? Set Manus loose and see what it uncovers.

- Content Creation Plus: Drafting reports, building presentations, maybe even generating complex documentation with interactive elements.

- Killing Tedium: Those soul-crushing multi-step digital processes? Maybe, just maybe, they can be delegated.

It genuinely feels like a tool that could massively accelerate the journey from idea to tangible result.

Okay, But Is It Perfect? Let's Talk Reality (The Bumpy Bits)

Now, before we all declare human developers obsolete, let's pump the brakes. Was it all smooth sailing? Absolutely not. Using Manus felt like driving that incredible prototype car – breathtakingly fast sometimes, sputtering and stalling at others.

- Stability? It's a Gamble: This is the big caveat. Sometimes tasks just… stop. "Server Overload" errors pop up. While my tasks eventually finished, reliability feels inconsistent. It's frustrating and makes it hard to count on for deadlines. Needs improvement!

- It Takes Its Sweet Time: Complex tasks aren't instant. We're talking minutes, often 30-60, sometimes longer. It's doing heavy lifting, but you need patience.

- Accuracy Needs Checking: As the AI-IXXU glitches showed, it's not infallible. The code might have bugs, the information might be slightly off. You must review and test its work. Don't blindly trust the output.

- The "Autonomy" Leash: It strives for independence, but often works best with clear guidance and course correction, especially on the really tricky parts.

So, How Does Manus Compare to Other AI?

You might be thinking, "Can't ChatGPT just code this?" Well, kinda, but not really.

ChatGPT or Claude can write code snippets, but they can't:

- Browse the live web for research (like shipping routes).

- Set up a full development environment.

- Run and test the code in real-time.

- Deploy the final site to a working URL.

- Go back and fix bugs on the live site without starting over.

Manus uses multiple AI models (reportedly Claude, GPT, etc.) working together – like a mini AI team with specialists for planning, coding, testing, and deployment. It's more autonomous. OpenAI's advanced features are getting closer, but Manus feels more like delegating the whole job.

The Good, The Bad, and The Ugly Truth

So, summing up the experience:

✅ The Awesome Stuff:

- It ACTUALLY Builds Things: Seriously, watching AI code a functional website from a chat prompt is still mind-bending. It works!

- Transparency Rocks: That "Manus's Computer" view showing its real-time actions? Brilliant for understanding and trust.

- Surprisingly Versatile: Tackled creative design, language invention, and complex logistics research. Impressive range.

- Learns from Mistakes (Sometimes): Seeing it debug its own code was a highlight. It can iterate based on feedback.

⚠️ The Annoying Stuff:

- Sloooow Burn: Be prepared to wait. 30-60+ minutes for complex tasks is common.

- Reliability Roulette: Will it finish? Will it crash? Feels like a Beta sometimes. Very hit-or-miss.

- Literal Interpretation: As with the logistics "visualizations" (which were tables), it can sometimes interpret requests too literally or miss the nuance.

- Over-Thinking: Occasionally seems to take a complex route for a simple request.

🐛 The Ugly (Real Bugs & Crashes I Hit):

Let's be specific about the failures, because they matter:

- AI-IXXU: The pronunciation guide – a core feature – was completely broken on initial delivery.

- Logistics Research: Manus itself encountered browser errors during its web research. It recovered, but it shows the system isn't immune to real-world web flakiness.

- General System Issues: Beyond project-specific bugs, the platform itself can be shaky. Think server overloads, tasks freezing mid-execution, timeouts losing progress, random disconnects. These aren't minor; they can be real showstoppers and incredibly frustrating after waiting 45 minutes.

And Yes, Let's Talk Pricing (After the Free Ride)

This is where the picture changes slightly. Those initial 1,000 credits went impressively far in my case, covering three big tests. That's fantastic trial value!

However, the credits did eventually run out right at the end of the third, very complex task, which highlights that ongoing use will have costs.

- Beyond the Trial: Once those starter credits are gone, you're looking at paid plans (around $39 or $199 a month in Beta for credit bundles).

- Consumption Rate: While 1000 credits covered a lot, complex tasks do consume them. If you were running many large projects continuously, the costs could certainly add up. The burn rate during a task might still feel high, even if the initial amount is generous.

So, the upfront trial? Surprisingly good value based on my run. The ongoing cost if you become a heavy user? That's where you'll need to weigh the benefits against the subscription fees.

AI IXX Trial Credit Calculator

Here is an example estimate of how many credits a project might cost, with a detailed breakdown and tips for stretching your trial credits.

Credit Optimization Tips

- Plan thoroughly before starting to minimize iterations (could save ~100 credits)

- Simplify your scope by focusing on core features first

- Consider breaking large projects into smaller, focused sub-projects

Credit Breakdown

- Base project cost (Website Development): 150 credits

- Complexity adjustment (5/10): 75 credits

- Size adjustment (4/10): 40 credits

- Iterations (3): 30 credits

- Web Research: 75 credits

- Interactive Frontend: 125 credits

- Data Processing: 0 credits

- Data Visualization: 0 credits

- Deployment: 0 credits

- Total: 450 credits

Credit Projections

- Projects possible with trial: 2 similar projects

- Remaining trial credits: 550 credits

- Additional features possible: Data Visualization, Deployment

Credit Distribution Analysis

The largest credit consumers in your project are:

- Base project cost: 33.3% (150 credits)

- Interactive Frontend: 27.8% (125 credits)

- Complexity adjustment: 16.7% (75 credits)
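If you want to sanity-check numbers like these yourself, the arithmetic is simple. Here's a minimal sketch that takes the stated 450-credit total and the 1,000-credit trial as given. The function names are mine, and this is not how the calculator is actually implemented.

```python
TRIAL_CREDITS = 1000  # starter credits mentioned in the article
STATED_TOTAL = 450    # total shown in the credit breakdown

# Line items as listed in the breakdown (zero-credit items omitted).
# Note: these add up to 495, not the stated 450, so the shares below are
# computed against the stated total, which is what the percentages use.
LINE_ITEMS = {
    "Base project cost": 150,
    "Complexity adjustment": 75,
    "Size adjustment": 40,
    "Iterations": 30,
    "Web Research": 75,
    "Interactive Frontend": 125,
}


def share(credits, total=STATED_TOTAL):
    """Percentage of the total, rounded to one decimal place."""
    return round(100 * credits / total, 1)


def projects_possible(budget, cost):
    """How many whole projects of a given cost fit in the budget."""
    return budget // cost


if __name__ == "__main__":
    for name in ("Base project cost", "Interactive Frontend", "Complexity adjustment"):
        print(f"{name}: {share(LINE_ITEMS[name])}%")
    print("Projects with trial:", projects_possible(TRIAL_CREDITS, STATED_TOTAL))
    print("Left after one project:", TRIAL_CREDITS - STATED_TOTAL)
```

Integer division is deliberate here: a half-finished project doesn't count, which is why 1,000 credits buys two 450-credit projects rather than 2.2.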

AI IXX Subscription Comparison

Compare different subscription plans and find the best fit for your projects.

Lowest tier:

- All basic AI features

- Web browsing capability

- Code generation

- No priority compute

- Limited rate limits

Middle tier:

- All basic AI features

- Web browsing capability

- Code generation

- Medium priority compute

- Enhanced stability

Highest tier:

- All AI features + Pro tools

- Advanced web browsing

- Advanced code generation

- High priority compute

- Maximum stability

- Bulk project processing

Plan Recommendation

Based on your current project (450 credits):

- The Free Trial will allow you to complete 2 similar projects.

- The Standard Plan would allow approximately 11 similar projects per month.

- If you're planning to build multiple complex projects regularly, consider the Standard Plan.

Project History & Examples

Based on the case studies in this article, here are the parameters behind the estimated credit costs for similar projects:

Case Study: AI-IXXU Language Website

Project Parameters

- Type: Website Development + Learning Tool

- Complexity: 9/10 (Creative language creation)

- Size: 7/10 (Multiple interactive pages)

- Iterations: 3 (Initial, content addition, bug fix)

- Features: Interactive Frontend, Creative Content, Web Audio API

Case Study: Dream Oracle

Project Parameters

- Type: Creative Content + Website

- Complexity: 4/10 (Lower complexity)

- Size: 3/10 (Single page application)

- Iterations: 1 (Completed in single attempt)

- Features: Interactive Frontend, Creative Content, SVG Generation

Case Study: Frozen Fish Logistics

Project Parameters

- Type: Research & Analysis + Dashboard

- Complexity: 7/10 (Moderate logistics planning)

- Size: 6/10 (Comprehensive documentation)

- Iterations: 2 (Research + Implementation)

- Features: Web Research, Data Visualization, Documentation, Interactive Map

Who Is This Actually For?

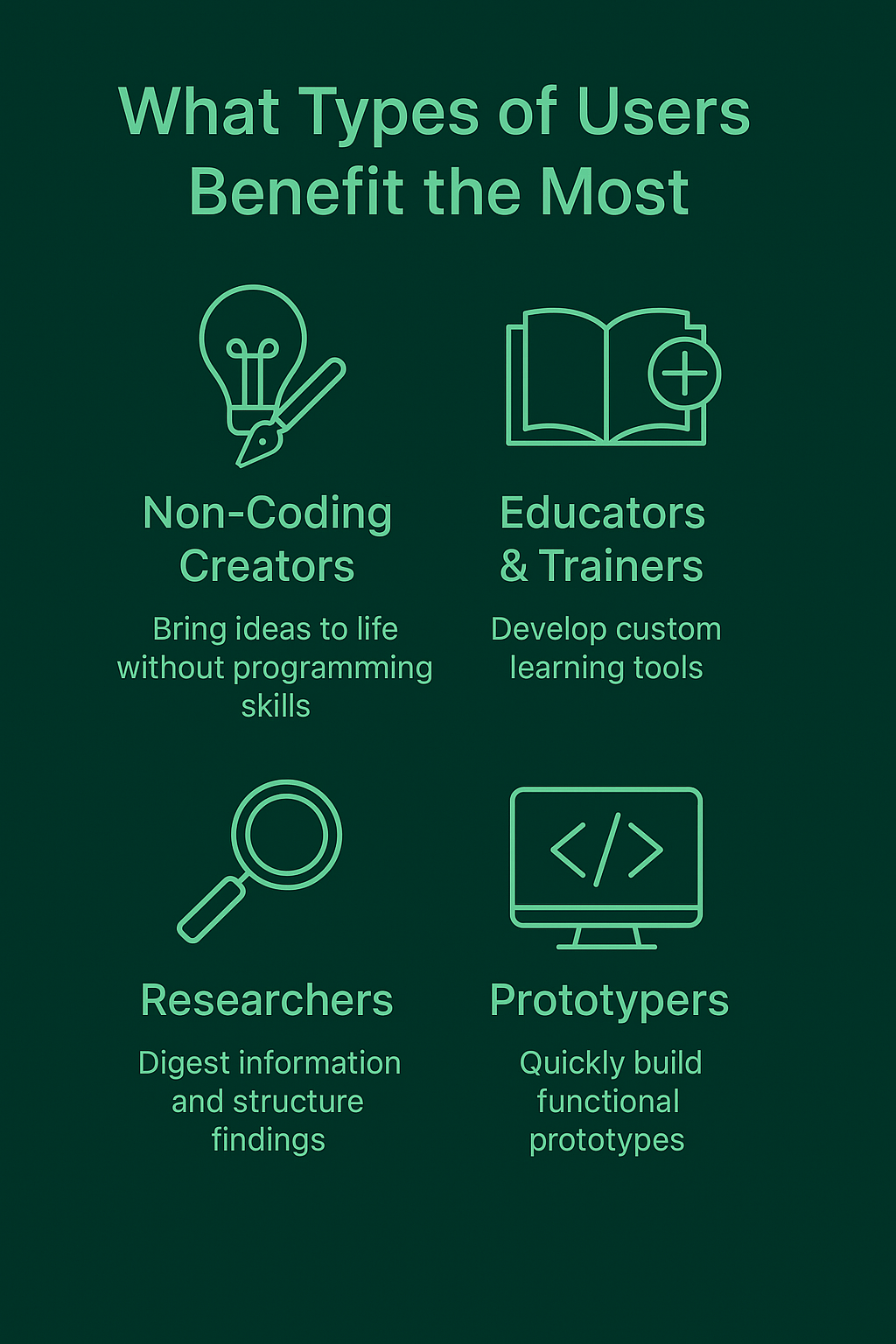

Despite the headaches, Manus could be useful for:

- Idea People (No Code): Got visions but zero coding chops? This can bring them to life.

- Content Creators: Need interactive bits but can't afford a dev? Worth a shot.

- Educators: Want custom learning tools? Manus can build surprisingly specific things.

- Researchers: Drowning in data? It can analyze and visualize (sometimes).

Probably Steer Clear If You:

- Are Broke: The cost is a major barrier.

- Have Tight Deadlines: The crashes and slow speed are risky.

- Need Mission-Critical Apps: It's just too unreliable for serious business use right now.

So, What's the Final Word? A Glimpse of Something Big

Spending time with Manus AI felt… different. It wasn't just using a tool; it felt like collaborating with something new. Something that could understand intent and take action in the digital world in a way I hadn't experienced before.

Is it perfect? Absolutely not. It's got rough edges – the stability wobbles, the sometimes-slow speed, the slightly terrifying credit burn rate. It's clearly still evolving.

But is it exciting? YES. Unreservedly yes.

It feels like witnessing the very early stages of the next leap in AI – moving from information retrieval to actual task execution. It shows that the dream of an AI that does things isn't just a dream anymore. It's being built, right now, warts and all.

Would I bet my company's critical launch on it today? Probably not. Would I recommend jumping in if you're curious about the absolute cutting edge and have some budget (and patience) to spare? Do it. It's a wild, fascinating ride.

Manus AI, in its current form, is a flawed, expensive, but undeniably brilliant glimpse into a future where our ideas can be brought to life faster and more ambitiously than ever before. Keep watching this space. The robots aren't just coming – they're starting to build stuff. And that's kind of amazing.