OpenAI Finally Launches Real-Time Video Capabilities for ChatGPT After Months of Delays

SAN FRANCISCO, CA – After a nearly seven-month wait, OpenAI has finally released the real-time video capabilities for ChatGPT that it first demonstrated earlier this year. The company announced the long-awaited feature during a livestream on Thursday, confirming that its “Advanced Voice Mode,” a human-like conversational interface, is now equipped with vision.

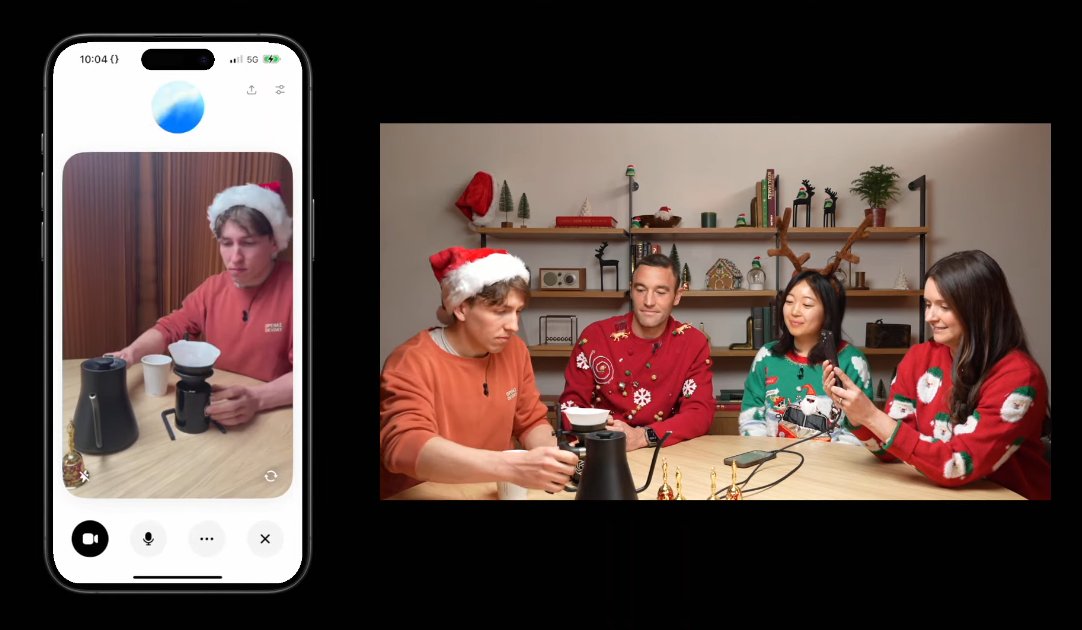

Subscribers to ChatGPT Plus, Team, and Pro can now use the ChatGPT app to point their smartphones at objects and receive near-instantaneous responses from the AI. This allows for dynamic interaction with the physical world, turning ChatGPT into a truly visual assistant.

- Real-time object recognition through smartphone cameras

- Screen sharing capability for technical assistance

- Integration with existing voice interface

- New "Santa Mode" voice preset option available

- Demonstrated on CBS's 60 Minutes with Greg Brockman

- Known limitation: Potential for AI "hallucinations"

- Activation through video icon in chat interface

Beyond the physical realm, Advanced Voice Mode with vision also extends to understanding what’s displayed on a device’s screen. Users can share their screen with the AI, enabling ChatGPT to explain complex settings menus, offer guidance on technical issues, or even assist with math problems.

To activate this new functionality, users can tap the voice icon next to the ChatGPT chat bar and then select the video icon at the bottom left of the screen. Screen sharing can be enabled by tapping the three-dot menu and selecting “Share Screen.”

The rollout of Advanced Voice Mode with vision began today and is expected to be completed within the next week. However, access is not universal. ChatGPT Enterprise and Edu subscribers will have to wait until January to gain access, and users in the EU, Switzerland, Iceland, Norway, and Liechtenstein face an indefinite delay, as OpenAI has not provided a timeline for its availability in those regions.

The new capabilities were previously previewed on CBS’s 60 Minutes, where OpenAI president Greg Brockman had Advanced Voice Mode with vision quiz correspondent Anderson Cooper on his anatomical knowledge. During the demo, ChatGPT recognized Cooper’s drawings on a blackboard and offered feedback on their accuracy. “The location is spot on,” the assistant said, in reference to a drawing of a brain. “The brain is right there in the head. As for the shape, it’s a good start. The brain is more of an oval.”

While the demonstration was impressive, it also highlighted the AI’s potential for "hallucinations": the assistant made a mistake on a geometry problem in the same segment. This serves as a reminder that even with its advanced capabilities, ChatGPT can sometimes generate inaccurate information.

The release of the visual component of Advanced Voice Mode has been plagued by delays. OpenAI reportedly announced the feature months before it was ready for public use, which led to multiple postponements. In May, the company promised a rollout “within a few weeks.” When Advanced Voice Mode was finally released in early fall, it lacked the visual component, and OpenAI continued developing its video analysis capabilities. In the interim, the company focused on bringing the voice-only Advanced Voice Mode experience to additional platforms and users, particularly in the EU.

Alongside Advanced Voice Mode with vision, OpenAI launched a festive “Santa Mode,” which adds a Santa Claus voice as a preset option. Users can access Santa Mode by tapping or clicking the snowflake icon next to the prompt bar in the ChatGPT app.

The launch of the long-awaited real-time video capabilities marks a significant step forward for ChatGPT, making it a more versatile and interactive tool. The ability to see and respond to both the physical world through the camera and the digital world through screen sharing makes ChatGPT a more capable personal assistant, despite its known limitations.