Llama 4 Explained: Scout, Maverick, Behemoth & The Cool Tech

Big news from Meta! They just dropped the first models from their new Llama 4 lineup: Llama 4 Scout and Llama 4 Maverick. Here’s the lowdown on what makes them exciting.

So, What's All the Buzz About?

These new models are pretty special for a few key reasons:

- Open-Weight: They're Meta's first open-weight AI models. Being 'open-weight' is awesome because it means anyone can peek under the hood, see how the model works, tweak things, and share improvements – it really speeds up innovation for everybody and makes powerful AI more transparent!

- Mixture-of-Experts (MoE): They use a cool, efficient setup called MoE. Think of it like having a big team of specialists – the AI smartly calls on only the specific 'experts' it needs for a particular question. This saves a ton of energy and makes the whole process quicker and cheaper.

- Huge Memory (Context Window): Even better, they can remember way more stuff than before! This 'context window' is like the model's short-term memory. A bigger window (like Scout's massive one) lets it understand and work with much longer pieces of text or follow really complex conversations without losing track. It helps the AI get the bigger picture for smarter answers.

- Multimodal: They can handle different types of info (like text and images) right from the start.

All this makes the Llama 4 release a really big deal for everyone building cool things with AI! Meta's saying these new Llamas are perfect for developers who want to create AI experiences that feel more personal and smart.

On top of that, besides Scout and Maverick (which you can grab now), they also gave a sneak peek at Llama 4 Behemoth. It's a super-powerful "teacher" model that's still learning but already showing amazing results.

Meet the New Llama Crew

Llama 4 Scout: The Efficient All-Rounder

Scout is built for efficiency, packing 17 billion active smarts (parameters) across 16 'experts'. Meta says it's the top multimodal model in its league, doing better than older Llamas and even significantly outperforming rivals like Gemma 3 and Gemini 2.0 Flash-Lite on many standard tests.

Its large context window allows it to process extremely long documents or complex sequences of information – think summarizing entire research papers or analyzing huge chunks of code – which is a major advantage.

Plus, the fact that it can run effectively on just one NVIDIA H100 GPU is a game-changer, especially for smaller teams or individual devs. It makes top-tier AI much more accessible.

Llama 4 Maverick: The Performance Champ

Maverick also has 17 billion active parameters but uses way more experts (128 out of 400 billion total!). This one's the performance champ, apparently beating impressive models like GPT-4o and Gemini 2.0 Flash in several areas.

It's even holding its own against the much bigger DeepSeek v3 on tricky tasks like reasoning and coding, but using way less power to do it. So you're getting that top-tier performance without needing quite as much raw computing muscle – fantastic news for efficiency!

People testing the chat version gave Maverick a really high score (1417 ELO), suggesting it's not just smart but also really engaging and natural to talk to!

Good to know: Both Scout and Maverick learned a lot from the giant Llama 4 Behemoth – they were 'distilled' from it, benefiting from the hard work done by their bigger sibling.

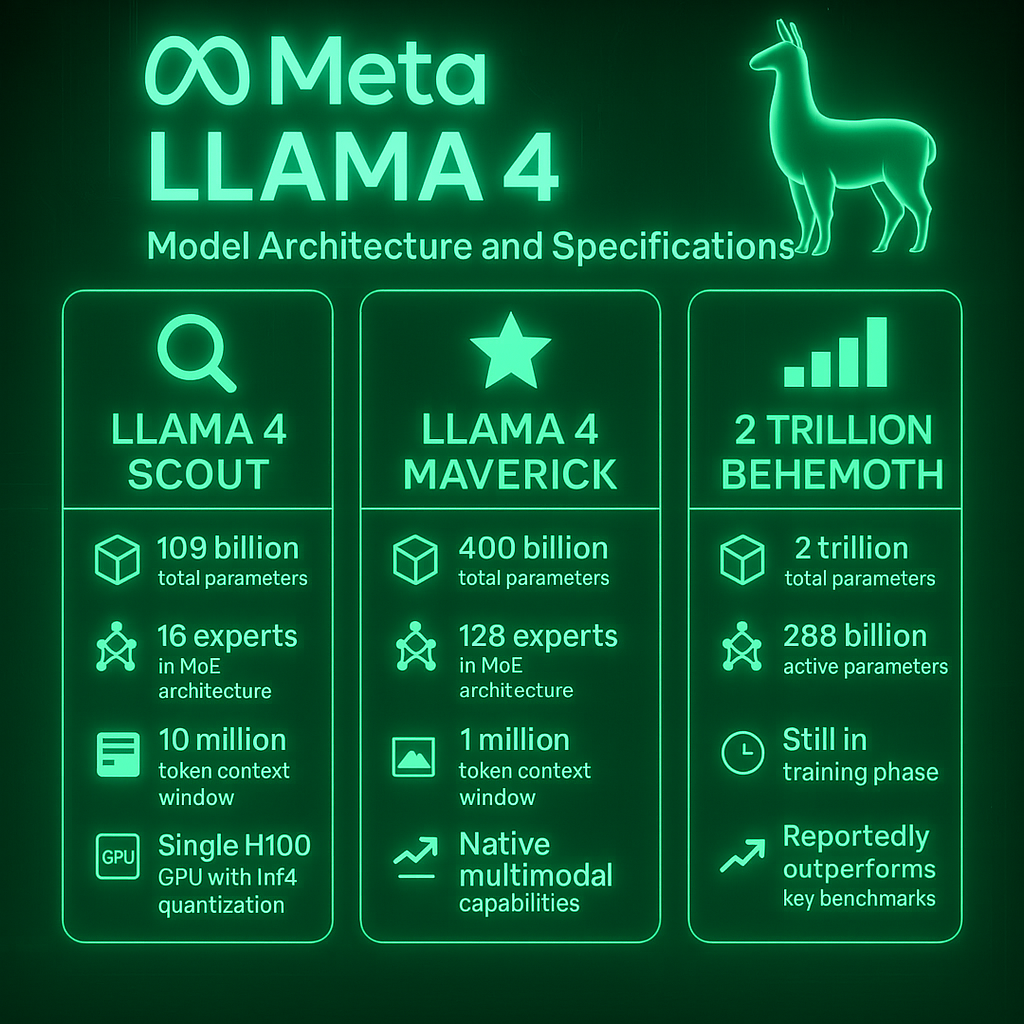

Llama 4 Models Comparison

Explore Meta's powerful new AI models with advanced capabilities like Mixture-of-Experts architecture, massive context windows, and multimodal processing.

Llama 4 Scout

Available Now-

Parameters Parameters determine the model's learning capacity. Active parameters are those used for each query, while total includes all available parameters.

Active: 17B Total: 109B109 billion total with 17 billion active parameters

-

MoE Architecture Mixture of Experts (MoE) architecture uses specialized sub-networks (experts) that are selectively activated for different inputs, improving efficiency.

16 experts in its Mixture of Experts design

-

Context Window The context window represents how much text the model can process at once, measured in tokens. A larger window allows for processing longer documents. (Max shown: 10M tokens)

Industry-leading 10 million token window (approx. 7,500+ pages)

-

Multimodal Capabilities

Supports text and image processing

-

Hardware Requirements

Fits on a single H100 GPU with Int4 quantization

-

Optimization

On-the-fly 4-bit or 8-bit quantization for accessibility

Llama 4 Maverick

Available Now-

Parameters Parameters determine the model's learning capacity. Active parameters are those used for each query, while total includes all available parameters.

Active: 17B Total: 400B400 billion total with 17 billion active parameters

-

MoE Architecture Mixture of Experts (MoE) architecture uses specialized sub-networks (experts) that are selectively activated for different inputs, improving efficiency.

128 experts in its Mixture of Experts design

-

Context Window The context window represents how much text the model can process at once, measured in tokens. A larger window allows for processing longer documents. (Max shown: 10M tokens)

1 million token context window

-

Multimodal Capabilities

Native support for processing text and images

-

Hardware Requirements

Fits on a single H100 host (e.g., Nvidia H100 DGX server)

Llama 4 Behemoth

Coming Soon-

Parameters Parameters determine the model's learning capacity. Active parameters are those used for each query, while total includes all available parameters.

Active: 288B Total: 2T2 trillion total with 288 billion active parameters

-

MoE Architecture Mixture of Experts (MoE) architecture uses specialized sub-networks (experts) that are selectively activated for different inputs, improving efficiency.

16 experts in its Mixture of Experts design

-

Context Window

Not specified (expected to be large)

-

Development Status

Still in training phase

-

Performance

Reportedly outperforms leading models on key benchmarks

-

Role

Acts as a teacher model for Scout and Maverick

| Features |

Scout |

Maverick |

Behemoth |

|---|---|---|---|

| Status | Available | Available | Coming Soon |

| Total Parameters | 109 billion | 400 billion | 2 trillion |

| Active Parameters | 17 billion | 17 billion | 288 billion |

| MoE Experts | 16 | 128 | 16 |

| Context Window | 10 million tokens | 1 million tokens | Not specified |

| Multimodal | Yes (Text/Image) | Yes (Native Text/Image) | Likely (Expected) |

| Hardware Target | Single H100 GPU | H100 DGX Server | Multiple GPUs (Cluster) |

| Quantization | 4-bit / 8-bit | Not specified | Not specified |

| Key Strength | Massive context window | Performance & multimodal | Raw power & teaching |

Behemoth: The Super-Smart Teacher

Okay, so Llama 4 Behemoth isn't out for download yet, but it's the powerhouse teaching the others. It's got a mind-boggling 288 billion active parameters (almost 2 trillion total – wow!). Meta says it's already outperforming big names like GPT-4.5 and Gemini 2.0 Pro on tough math and science tests, which really shows off its reasoning chops.

Think of it like Behemoth did the super-hard, advanced learning, and then shared its 'notes' and insights (in a super complex AI way, of course) with Scout and Maverick so they could get smart much faster. Having Behemoth as a teacher was absolutely key to making Scout and Maverick perform so well.

Cool Tech Powering Llama 4

So what makes these models tick? Let's recap the highlights:

- Mixture-of-Experts (MoE): The smart, efficient way to use only the needed AI brainpower for a task.

- Built for Multimodal: Learned using text, images, and video frames together for a more rounded understanding.

- Giant Memory (Context Window): Scout's 10 million token ability opens doors for analyzing huge amounts of info.

- Efficient Training: Clever tricks (like FP8 precision) used during training for maximum performance without wasting energy.

- Smarter Learning: Advanced techniques like reinforcement learning used after initial training to keep improving.

Open & Ready to Use!

True to their style, Meta is keeping things open. This commitment to open source means developers can start building all sorts of cool new apps, tools, or even just experiment right away.

You can download Llama 4 Scout and Maverick right now from llama.com and Hugging Face. They'll pop up on other cloud platforms and services soon too. Plus, you can already try out the new smarts powering Meta AI on WhatsApp, Messenger, Instagram, and the Meta.AI website.

Keeping Things Safe and Fair

Safety First

Meta knows safety is super important. They built safety checks into Llama 4 from the beginning and are giving developers handy tools like Llama Guard (to check inputs/outputs) and Prompt Guard (to spot malicious prompts) to help keep applications safe.

Tackling Bias

They also mentioned they've worked hard to make Llama 4 less biased, particularly on tricky social or political topics. The goal is for the AI to provide more balanced, neutral answers and understand different viewpoints – a big step forward.

What's Next for Llama?

Meta says this is just the start for the Llama 4 family. They want to make future Llamas even better at actually doingthings, chatting more naturally, and tackling really complex problems. Improving these areas could lead to some seriously helpful future AI.

We'll probably hear more about their vision at their LlamaCon event on April 29th!

The Bottom Line

This is genuinely exciting stuff! Meta's giving the AI world some powerful, flexible, and more accessible new tools. With the community able to build on these open models, it'll be awesome to see the creative and innovative things people come up with – from smarter assistants to entirely new ways to create art, music, or code.