HunyuanCustom: Tencent Releases Advanced Architecture for Custom AI Video

If you're following the AI video space, you know consistent, controllable personalized video generation has been the elusive goal. Today, Tencent's Hunyuan team introduced HunyuanCustom, an architecture designed specifically to tackle this challenge head-on.

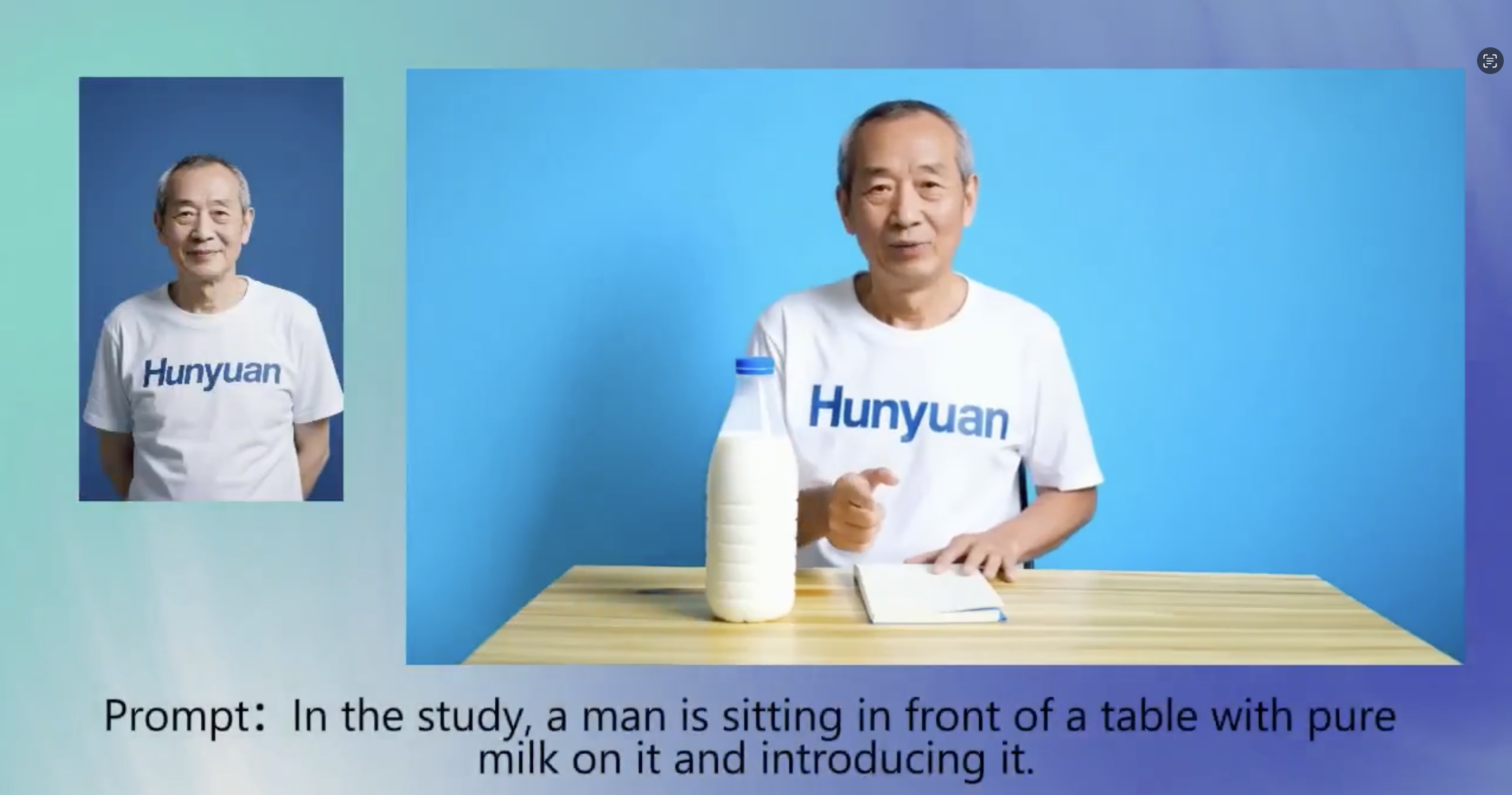

This isn't just another incremental update. HunyuanCustom represents a significant development in creating high-fidelity videos where specific subjects – people, characters, objects – maintain their identity across different actions and scenes.

Here's the Summary of It

The core problem HunyuanCustom addresses is subject consistency. Previous models often struggled to keep a specific person looking like that exact person when generating new video sequences. HunyuanCustom leverages its underlying HunyuanVideo-13B model and a multimodal approach to maintain that identity, whether for a single subject or multiple subjects interacting within a scene.

According to the development team, the model's performance is noteworthy, reportedly outperforming existing open-source alternatives and holding its own against leading closed-source platforms in terms of detail, motion smoothness, and lighting realism.

Key Capabilities Announced

HunyuanCustom isn't limited to simple text-to-video. Its strength lies in leveraging multiple input types for fine-grained control:

- Single-Subject Transformation: Provide an image of a subject and a text prompt describing an action (e.g., "Image of Person A" + "Text: riding a bicycle"). The system generates a video of Person A performing that action, potentially in new attire or environments, while preserving their core identity.

- Multi-Subject Composition: Input separate images of multiple subjects to generate scenes featuring them together (e.g., "Image of Person B" + "Image of Person C" + "Text: having a meeting").

- Audio-Driven Synthesis: Synchronize video output with an audio track, enabling applications like creating talking avatars from static images or animating characters to sing. This has clear implications for digital assistants, virtual interfaces, and creative content.

- Video-Based Subject Integration: The architecture allows for inserting or replacing subjects within existing video footage, offering new possibilities for post-production and creative video editing.

Availability and Access

Crucially, Tencent has open-sourced the single-subject video generation capability. Developers and researchers can access and build upon this core technology. A demonstration is also live on the Hunyuan website for direct testing. Further features of the HunyuanCustom architecture are slated for future release.

For Deeper Exploration:

Project: https://hunyuancustom.github.io

Technical Details: https://arxiv.org/pdf/2505.04512

Code: https://github.com/Tencent/HunyuanCustom

The Takeaway

HunyuanCustom marks a notable advancement in the quest for controllable, high-quality personalized video generation. By tackling subject consistency and offering multimodal control, while also open-sourcing a key component, this release provides significant new tools and potential for developers, researchers, and creators in the AI field. Its real-world impact will depend on how the community utilizes and builds upon this foundation.