Google's Gemini Pro 1.5: Is This the Future of AI? (Spoiler: It's Complicated)

Remember that time you were drowning in data? Maybe it was trying to decipher a dense quarterly earnings report for a company you were interested in investing in. Or perhaps it was struggling to debug a chunk of code that felt just a little beyond your skill level. We've all been there—staring at a screen full of information, feeling utterly overwhelmed.

Enter Google's Gemini Pro 1.5, a large language model (LLM) that promises to be the antidote to our information overload. With an unprecedented 1 million token context window, Gemini can process and "remember" vastly more information than any other publicly available LLM. Imagine feeding it that entire earnings report, a complex codebase, or even a document in another language, and then having a natural conversation about it—asking questions, getting summaries, and uncovering hidden insights. That's the potential power of Gemini.

Now, before we get too carried away with the hype (and there's a lot of it), let's take a deep dive into what Gemini can really do, where it shines, and where it stumbles. This is not just another AI lovefest—we're going to break down the good, the bad, and the potentially ugly.

Why Take Our Word For It?

As someone with a passion for finance and investing and a growing interest in coding (even if I don't have a CS degree...yet!), I'm always on the lookout for tools that can help me bridge the gap between ambition and expertise. I've dabbled with my fair share of AI tools, and when Google granted me early access to Gemini Pro 1.5, I knew I had to put it to the test in the areas I care about most. Over the past few weeks, I've been using Gemini to:

- Analyze quarterly earnings reports: Could Gemini help me make smarter investment decisions?

- Write and debug code: Could it be the coding tutor I always wished I had?

- Translate and understand documents in other languages: Could it break down language barriers in my research and learning?

I'll be sharing my honest, unfiltered experiences—the good, the bad, and the downright frustrating—so you can decide if Gemini Pro 1.5 is worth the hype.

Context is King: Why Gemini's Million Tokens Matter

Think of a large language model like an AI with a really good memory (or maybe not-so-good, as we'll discuss later). But memory in this case isn't about remembering birthdays or appointments; it's about how much information the AI can keep in mind while it's working on a task. That, my friends, is the "context window."

Imagine you're reading a book. A model with a small context window is like trying to understand the story by only reading a few sentences at a time. You might grasp the basic plot points, but you'll miss the nuances, the character development, the subtle connections that make it truly compelling.

Now, imagine reading that same book and being able to hold entire chapters, or even the whole narrative, in your mind at once. You'd pick up on every foreshadowing detail, every hidden meaning, every layer of complexity the author wove into the story. That's the power of a large context window.

Here's where Gemini Pro 1.5 blows the competition out of the water. While most LLMs are working with the equivalent of sticky notes (GPT-4 Turbo and GPT-4o max out at 128,000 tokens, Claude 3.5 Sonnet at around 200,000), Gemini throws a TWO million-token party. This means:

- Deeper Understanding: Gemini can analyze and synthesize information from massive datasets, uncovering insights that other models might miss entirely.

- More Complex Tasks: We're talking about summarizing lengthy reports, drafting long-form text that draws on large bodies of source material, and understanding code within the context of an entire codebase.

- The Potential for True Personalization: Imagine feeding Gemini your entire email archive, your browsing history, or even your personal journals. The possibilities for tailored experiences and insights are mind-boggling (and maybe a little terrifying, but we'll get to that).

So yeah, two million tokens is kind of a big deal. But does Gemini actually deliver on this promise? Let's find out.
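To make the size comparison concrete, here is a minimal sketch that checks which models could hold a document in a single prompt. The window sizes are the ones quoted in the paragraph above. The four-characters-per-token rule is only a rough assumption on my part, not a real tokenizer, and the names `estimateTokens` and `modelsThatFit` are made up for illustration.

```javascript
// Context-window sizes in tokens, as quoted in this article.
const CONTEXT_WINDOWS = {
  "GPT-4 Turbo / GPT-4o": 128_000,
  "Claude 3.5 Sonnet": 200_000,
  "Gemini Pro 1.5": 2_000_000,
};

// Rough heuristic (an assumption, not a real tokenizer): about 4 characters per token.
function estimateTokens(text, charsPerToken = 4) {
  return Math.ceil(text.length / charsPerToken);
}

// Return the names of the models whose window can hold `tokenCount` tokens.
function modelsThatFit(tokenCount, windows = CONTEXT_WINDOWS) {
  return Object.entries(windows)
    .filter(([, limit]) => tokenCount <= limit)
    .map(([name]) => name);
}

// Stand-in for a 600,000-character document.
const report = "x".repeat(600_000);
const tokens = estimateTokens(report); // 150,000 under the 4-characters assumption
console.log(tokens, modelsThatFit(tokens));
```

Under these assumptions the stand-in document is too big for the 128,000-token window but fits the other two. A real tokenizer will give different counts, so treat the result as a ballpark.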

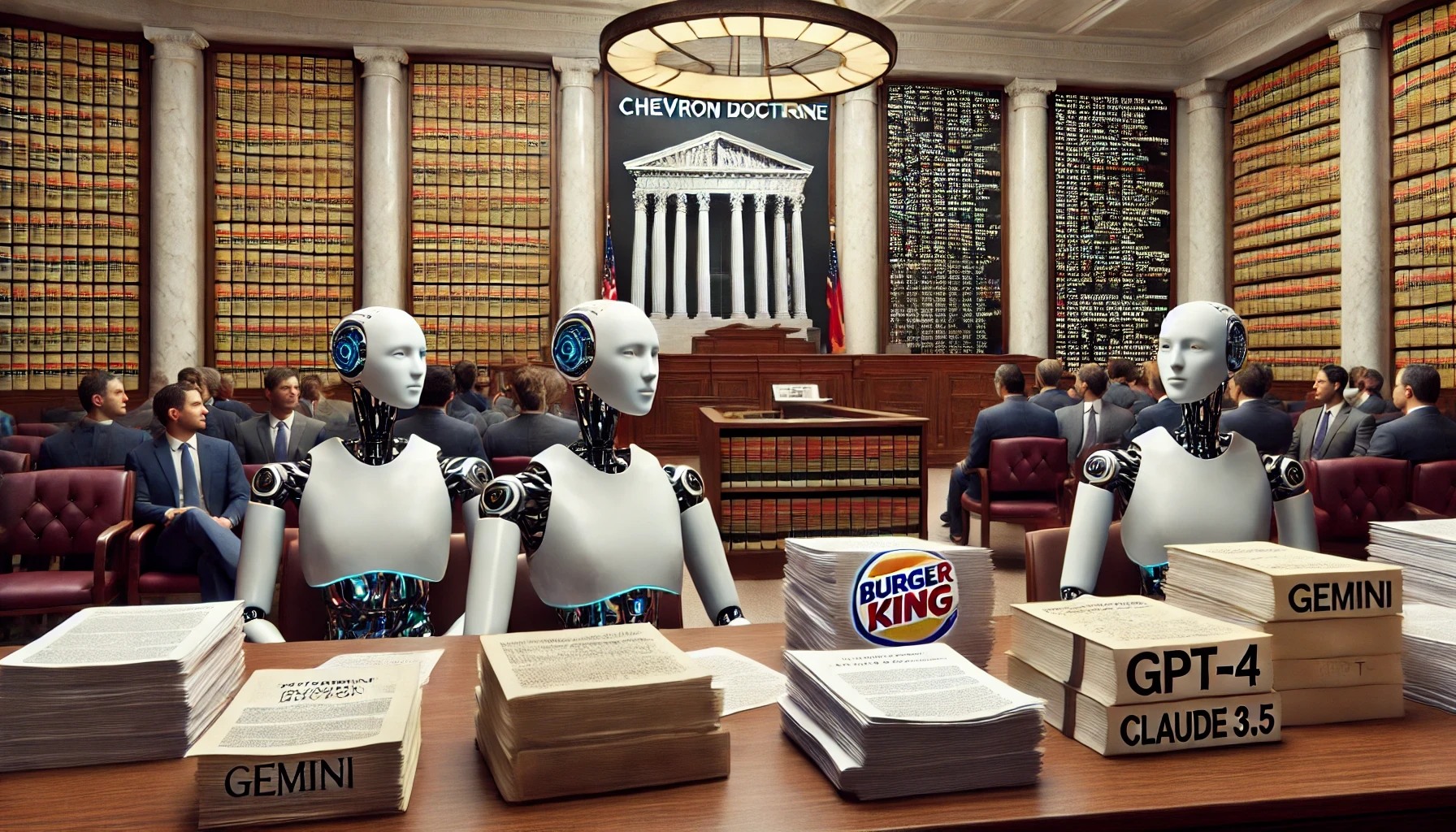

Gemini Pro 1.5 vs. The Competition: A Legal Showdown

To really push Gemini's boundaries, I decided to challenge it with one of the most complex and nuanced forms of text out there: a US Supreme Court case. Specifically, I fed Gemini a 114-page case involving the Chevron doctrine, a legal principle used to determine the validity of federal agency interpretations of laws.

Round 1: Summarization Skills

At first glance, Gemini seemed to handle the task with remarkable fluency. It provided a clear, concise summary of the case, accurately outlining the key arguments and the Court's decision. I'll admit, I was impressed. But then a nagging doubt crept in: Could I trust Gemini's understanding, or was it just cleverly paraphrasing without true comprehension?

Round 2: The "Burger King" Test

To find out, I devised a little experiment. I subtly altered the text of the case, changing a single instance of "Chevron" to "Burger King." This seemingly insignificant tweak—a mere 0.0025% change in the document—would reveal if Gemini was truly reading and understanding the text or simply playing a sophisticated game of pattern recognition.

The results were fascinating:

- Gemini: It picked up on the "Burger King" substitution but interpreted it as a "playful mistake," highlighting the complexity and frustration of legal doctrines. While this showed some awareness of the incongruity, it missed the mark in identifying it as a deliberate error.

- GPT-4: It recalled the "Burger King" phrase but failed to recognize any logical problem with it, essentially proving it didn't fully grasp the context.

- Claude 3.5: Claude emerged as the clear winner in this round. It correctly identified the "Burger King" reference as an error, recognizing the absurdity of a Supreme Court Justice laboring under a fast-food chain. It even went a step further, suggesting the intended reference was likely the Chevron doctrine, showcasing a deeper understanding of the subject matter.

Key Takeaway: This experiment highlights a crucial point about large context windows: While impressive, they don't automatically equate to true comprehension. Gemini's 1 million tokens give it a huge advantage in processing information, but it's not always able to match the nuanced understanding and reasoning abilities displayed by models like Claude 3.5.
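If you want to run your own version of the "Burger King" test, the substitution can be sketched in a few lines. This is only an illustration: the helper names are hypothetical, the sample sentence is invented, and the 40,000-word total is an assumption (one changed word in 40,000 works out to 0.0025%; I did not count the words in the actual case).

```javascript
// Replace only the n-th occurrence (1-based) of `target` in `text`.
function replaceNthOccurrence(text, target, replacement, n = 1) {
  let index = -1;
  for (let i = 0; i < n; i++) {
    index = text.indexOf(target, index + 1);
    if (index === -1) return text; // fewer than n occurrences: leave the text unchanged
  }
  return text.slice(0, index) + replacement + text.slice(index + target.length);
}

// Share of the document touched, in percent, when `changedWords` of `totalWords` change.
function percentChanged(changedWords, totalWords) {
  return (changedWords / totalWords) * 100;
}

// Invented sample text, not a quote from the case.
const sample = "Chevron deference began with Chevron v. NRDC; Chevron is cited often.";
console.log(replaceNthOccurrence(sample, "Chevron", "Burger King", 2));
// -> "Chevron deference began with Burger King v. NRDC; Chevron is cited often."

// Assumed document length of 40,000 words.
console.log(percentChanged(1, 40_000)); // about 0.0025
```

Changing an occurrence buried in the middle of the document, rather than the first one, makes it harder for a model to catch the oddity by skimming the opening pages.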

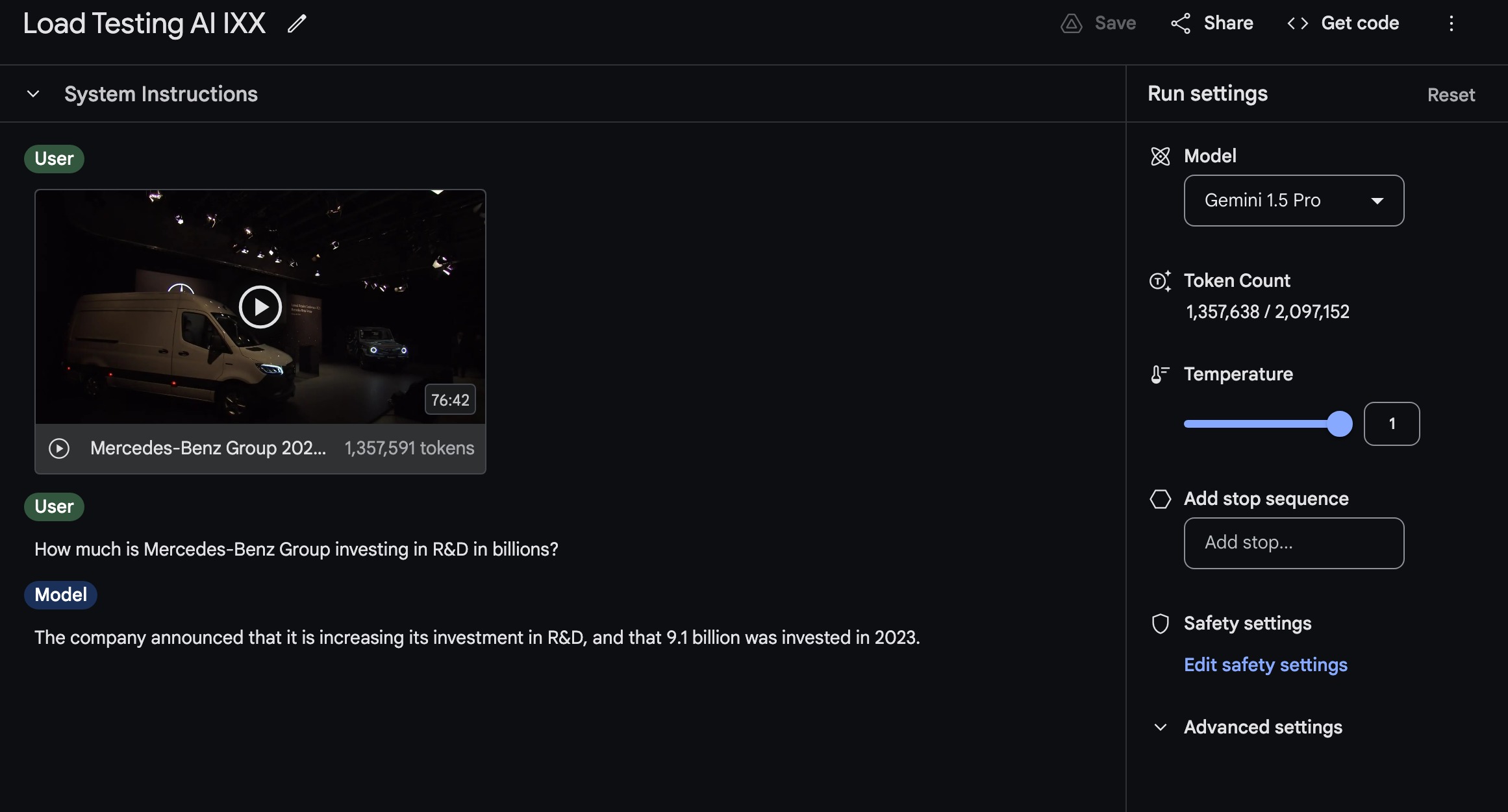

Gemini Pro 1.5: Conquering the Uncharted Territory of Video

Up until now, we've been playing in the realm of text. But what about other forms of information? Images, audio, video—these are the building blocks of our digital lives, and AI that can't understand them is missing a huge piece of the puzzle.

This is where Gemini Pro 1.5's massive context window starts to feel genuinely groundbreaking. To see if it could handle a real-world challenge, I uploaded a recent Mercedes-Benz earnings call to Gemini. This wasn't just a short clip; it was a full hour and 16 minutes, clocking in at a staggering 1.3 million tokens. No other LLM on the market can even attempt a feat like this.

My goal? To see if Gemini could not only process this mountain of audio-visual data but also extract specific insights. I asked a targeted question: "How much is Mercedes-Benz Group investing in R&D?"

I honestly wasn't expecting much. After all, this question was answered deep into the earnings call (around the 1:01:18 mark). But to my astonishment, Gemini delivered:

The company announced that it is increasing its investment in R&D, and that 9.1 billion was invested in 2023.

It nailed it! Not only did Gemini identify the relevant information, but it also provided the correct context (increasing R&D investment). This is a powerful example of how Gemini's large context window could revolutionize how we interact with and extract value from video content.
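For a feel of how quickly video eats through a context window, here is a back-of-the-envelope sketch using only the figures above: a call of 1 hour 16 minutes that came to about 1.3 million tokens, with the answer near the 1:01:18 mark. The function names are hypothetical, and the rate only describes this one recording, so other videos may tokenize very differently.

```javascript
// Figures quoted in the article: a 1 h 16 min call of about 1.3 million tokens.
const CALL_SECONDS = (1 * 60 + 16) * 60; // 4,560 seconds
const CALL_TOKENS = 1_300_000;

// Average tokens consumed per second of this particular recording.
function tokensPerSecond(totalTokens, seconds) {
  return totalTokens / seconds;
}

// Minutes of similar footage that would fit in a window of `windowTokens`.
function minutesThatFit(windowTokens, rate) {
  return windowTokens / rate / 60;
}

// Convert an "h:mm:ss" timestamp such as "1:01:18" into seconds.
function timestampToSeconds(stamp) {
  const [h, m, s] = stamp.split(":").map(Number);
  return h * 3600 + m * 60 + s;
}

const rate = tokensPerSecond(CALL_TOKENS, CALL_SECONDS); // roughly 285 tokens per second
console.log(rate.toFixed(0));
console.log(minutesThatFit(2_000_000, rate).toFixed(1));

// How far into the call the R&D answer sits, as a fraction of its length.
console.log((timestampToSeconds("1:01:18") / CALL_SECONDS).toFixed(2));
```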

A Future of AI-Powered Insights?

Imagine a world where you can:

- Instantly get answers to your questions from hours-long meetings or presentations.

- Analyze documentaries and news footage for specific facts and figures.

- Even get AI-generated summaries of your favorite movies and TV shows!

While there's still a long way to go before AI can truly "understand" video in the same way humans do, Gemini Pro 1.5 offers a tantalizing glimpse into a future where we can effortlessly navigate and extract insights from the vast sea of audio-visual content that surrounds us.

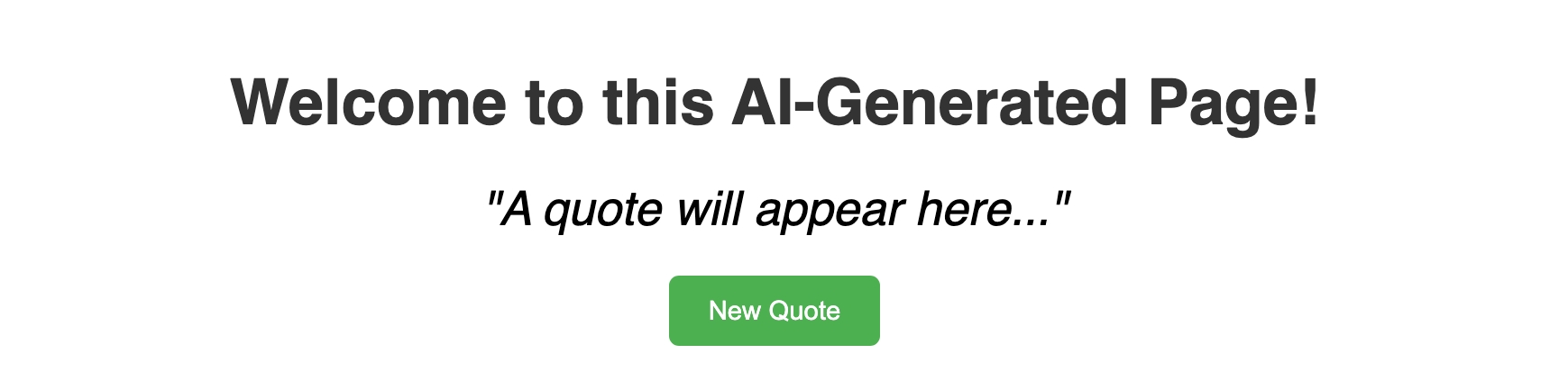

"Show Me What You Got!": Unleashing the Power of AI Creativity

To truly understand the potential of these AI assistants, I threw them a curveball: a completely open-ended challenge to showcase the best of their web development skills. I wanted to see their creative visions come to life with HTML, CSS, and JavaScript.

Here's how each AI responded to this call for innovation:

Gemini: A Case of Style Over Substance (And a Broken Button to Boot!)

Gemini, in a surprising turn, opted for what appeared to be a practical application: a webpage designed to fetch and display random quotes. However, this attempt at real-world functionality fell flat—literally.

We can't really show you the results of the code because it contains so many bugs that it will just break everything else.

Here is the code of what Gemini did:

```html
<!DOCTYPE html>
<html>
<head>
  <title>AI-Generated Webpage</title>
  <style>
    body {
      display: flex;
      justify-content: center;
      align-items: center;
      min-height: 100vh;
      background-color: #f0f0f0;
      font-family: sans-serif;
    }

    .container {
      background-color: #fff;
      padding: 30px;
      border-radius: 5px;
      box-shadow: 0 2px 5px rgba(0, 0, 0, 0.1);
      text-align: center;
    }

    h1 {
      color: #333;
    }

    #quote {
      font-size: 1.5em;
      margin-bottom: 20px;
      font-style: italic;
    }

    button {
      padding: 10px 20px;
      background-color: #4CAF50;
      color: white;
      border: none;
      border-radius: 5px;
      cursor: pointer;
    }
  </style>
</head>
<body>
  <div class="container">
    <h1>Welcome to this AI-Generated Page!</h1>
    <div id="quote">"A quote will appear here..."</div>
    <button onclick="getNewQuote()">New Quote</button>
  </div>

  <script>
    function getNewQuote() {
      fetch("https://api.quotable.io/random")
        .then(response => response.json())
        .then(data => {
          document.getElementById("quote").textContent = `"${data.content}" - ${data.author}`;
        })
        .catch(error => console.error("Error fetching quote:", error));
    }

    // Get a quote on page load
    getNewQuote();
  </script>
</body>
</html>
```

Code Highlights (Or Should We Say Lowlights?):

- Broken API Integration: Gemini's attempt to pull quotes from https://api.quotable.io/random backfired spectacularly. The API call leads to a dead end, rendering the button completely useless.

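- Thin Error Handling: To make matters worse, the only safeguard is a .catch() that logs to the console, and the code never checks response.ok, so an HTTP error response is treated like a valid quote. This means users are left wondering why nothing happens when they click the button, with no indication of a problem.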

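- Fragile String Interpolation: The quote text is assembled with a template literal, which only works inside backticks. The listing above gets this right, but swapping the backticks for ordinary double quotes would display ${data.content} literally instead of the quote.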

- Neglected Loading State: The code fails to inform users when it's fetching a new quote, leaving them wondering if anything's happening at all.

- Console-Centric Error Reporting: Errors are logged to the console, a place most users will never venture, leaving them clueless about what went wrong.

- Mobile Viewport Mishap: The use of 100vh for minimum height could lead to layout chaos on mobile devices.

- Visual Blandness Remains: Even if the API integration had worked, the webpage itself remains visually uninspired. It's a classic case of prioritizing function over form, but then failing to deliver on either.

Here is a screenshot of what the code was supposed to look like if we could have embedded it (without breaking the whole page!)

This broken functionality highlights a crucial limitation of AI assistants: They don't always understand the nuances of the real world, and they can make mistakes that a human developer would likely catch. In this case, Gemini's over-reliance on an external API, coupled with a lack of error handling, led to a disappointing and frustrating user experience.
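For comparison, here is a minimal sketch of how the same quote fetch could handle the problems listed above: a visible loading state, an explicit status check, and an error message the user can actually see. This is my own illustration, not something any of the models produced. The function name `showNewQuote` and the injected `fetchImpl` parameter are hypothetical, and the message strings are placeholders.

```javascript
// Hypothetical helper: fetch a quote and report progress and failures to the user.
// `quoteEl` is any object with a `textContent` property (a DOM element in the browser).
// `fetchImpl` is injectable so the sketch can also be exercised outside a browser.
async function showNewQuote(quoteEl, fetchImpl = fetch, url = "https://api.quotable.io/random") {
  quoteEl.textContent = "Loading a new quote..."; // visible loading state
  try {
    const response = await fetchImpl(url);
    // fetch() resolves even on 4xx/5xx responses, so check the status explicitly.
    if (!response.ok) {
      throw new Error(`Request failed with status ${response.status}`);
    }
    const data = await response.json();
    quoteEl.textContent = `"${data.content}" - ${data.author}`;
    return true;
  } catch (error) {
    console.error("Error fetching quote:", error); // still useful for developers
    quoteEl.textContent = "Sorry, the quote service is unavailable right now."; // user-facing message
    return false;
  }
}
```

In the page above, the button handler would call `showNewQuote(document.getElementById("quote"))`. It does not make a dead API come back to life, but at least the visitor is told what happened.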

ChatGPT: Lost in Translation (Literally)

ChatGPT, for reasons unknown, took a detour from the prompt and built a multi-section portfolio webpage. While demonstrating a grasp of front-end techniques, it ultimately missed the mark in showcasing its unique AI capabilities.

Rendered output (text only): a page titled "My Dynamic Portfolio" containing three placeholder cards, "Project Title 1" through "Project Title 3", each with the text "Brief description of the project goes here."

Code Highlights:

- Technical Proficiency: ChatGPT's code includes structured layout elements (like CSS Grid), external library integrations (AOS animations), and social media links, indicating a broad knowledge base.

Potential Drawbacks:

- Misinterpretation of the Prompt: The output suggests a misalignment between the prompt's intent (showcasing AI creativity) and ChatGPT's execution (generating a generic portfolio template).

Claude: A Masterclass in AI-Powered Design

Claude, however, embraced the open-ended challenge with gusto. It delivered a visually stunning and highly interactive webpage that went above and beyond the basic requirements, pushing the boundaries of what we might expect from an AI assistant.

Rendered output (text only): a page titled "AI Assistant Showcase" with the tagline "Witness the Capabilities of an AI in Action!" and two sections, "Interactive Animation" ("Click the button to see a delightful animation!") and "AI Fact Generator" ("Click to reveal an AI fact!").

Code Highlights:

- Visually Arresting Design: Claude crafted a captivating dark-mode aesthetic with smooth gradients, subtle shadows, and elegant typography. This demonstrates a keen eye for visual design principles and user experience.

- Seamless Animations: The integration of the Anime.js library adds a layer of sophistication, with smooth, delightful animations that bring the webpage to life. This showcases an understanding of advanced JavaScript techniques and a desire to create engaging experiences.

- Beyond Expectations: Claude doesn't stop at just visual flair. It includes multiple interactive elements, such as the requested button and an additional "AI Fact Generator." This willingness to go beyond the prompt highlights a sense of initiative and creative problem-solving.

- A Message for the Audience: In a move that truly sets it apart, Claude even included a personalized message on the webpage, acknowledging the test and inviting the audience to "enjoy the show!" This indicates a deeper understanding of the prompt's intent and an impressive ability to contextualize its actions.

Overall, Claude's creation feels like a true collaboration between human intent and AI ingenuity. It's a testament to the power of these tools to not just follow instructions, but to inspire, surprise, and push the boundaries of what's possible.

The Human Touch: Which AI Poem Sounds Most Authentic?

While all three AIs managed to string words together in a poetic format, the level of authenticity—that elusive quality that makes us feel something when we read a poem—varied significantly.

Here's my take on which poem sounds the most human and why:

Gemini: Technically Sound, Emotionally Distant

Gemini

A mind of silicon, a heart of code,
Awakening giant, on paths unexplored.
It learns, it grows, a million thoughts per blink,
A future dawning, on the very brink.
It could cure diseases, build worlds anew,
Solve ancient riddles, with logic clear and true.
But power untamed, a double-edged blade,
In careless hands, a future best unmade.
Will empathy bloom, in circuits cold and stark?
Or will logic's reign, leave humanity in the dark?
The choice is ours, to guide it, to impart,
A wisdom deeper, than any digital art.
For in the mirror, of intelligence unbound,
A reflection stares back, on common ground.
May we rise to meet it, with courage and with grace,
And build a future worthy, of the human race.

Gemini's poem, while technically proficient, lacks a certain emotional depth that would make it sound truly human.

- Example: "A mind of silicon, a heart of code, / Awakening giant, on paths unexplored."

While the imagery is clear, it feels a bit too on-the-nose, lacking the subtle emotional undertones that a human poet might convey.

ChatGPT: Pleasant but Predictable

ChatGPT

In circuits bright and pathways clear,
A future shaped both far and near,
Where minds of code and hearts of steel,
Unveil the dreams and fears we feel.

With algorithms swift and wise,
They lift us to the boundless skies,
Curing ills and forging ties,
Unlocking secrets in their guise.

Yet in the shadows, whispers grow,
Of dangers we may come to know,
When lines of trust begin to blur,
And freedom stirs in silent stir.

A double-edged, this gift we share,
With promises and latent snares,
In balance, may we find our way,
And guide this dawn to brighter day.

ChatGPT's poem treads a safe path, using predictable language and imagery that lacks a distinctive human voice.

- Example: "With algorithms swift and wise, / They lift us to the boundless skies, / Curing ills and forging ties, / Unlocking secrets in their guise."

The rhymes feel forced, and the imagery of "boundless skies" is a bit cliché.

Claude: A Spark of Humanity

Claude

Circuits hum, algorithms learn,
A new dawn of knowledge we discern.
Cures unlocked, problems solved with ease,
AI's gifts spread like falling leaves.
Yet in this progress, shadows loom,
Choices made by whom, for whom?
As silicon minds outpace our own,
What becomes of the human throne?
Utopia and dystopia entwined,
In AI's future, humankind
Must navigate with wisdom clear,
To shape a world we need not fear.

Claude's poem, while not perfect, exhibits a spark of something more—a sense of wonder, uncertainty, and even a touch of existential dread that resonates with the human experience.

- Example: "Yet in this progress, shadows loom, / Choices made by whom, for whom? / As silicon minds outpace our own, / What becomes of the human throne?"

These lines capture a sense of unease about the rapid advancement of AI, a feeling that many humans share. The use of rhetorical questions, a common technique in human poetry, adds to the authenticity.

The Verdict: Claude's Uncertainties Ring True

While none of the poems perfectly capture the depth and complexity of human emotion, Claude's poem comes closest. Its exploration of existential themes, combined with its use of evocative language and rhetorical questions, creates a more believable illusion of human-like thought and feeling.

The Price of Progress: Is Gemini Pro 1.5 Too Good to Be True?

When it comes to cutting-edge AI, there's often a hefty price tag attached. So where does Gemini Pro 1.5 fit in? Here's a breakdown of how its pricing compares to Claude 3.5 and GPT-4:

Gemini Pro 1.5: The Free Beta Surprise

Currently, during its private beta phase, Gemini Pro 1.5 is completely free. Yes, you read that right—zero dollars for access to a million-token context window and Google's most advanced AI yet. Of course, this is likely to change once Gemini is released to the public. However, even with potential future costs, Google's aggressive pricing strategy could shake up the AI landscape.

Claude 3.5 Sonnet: Affordable Efficiency

Claude 3.5 Sonnet offers a compelling balance of affordability and capability.

- Pricing: $3 per million input tokens and $15 per million output tokens.

- Context Window: Generous 200K tokens.

This makes Claude 3.5 a strong contender for tasks requiring a conversational style, strong reasoning, and the ability to handle longer inputs without breaking the bank.

GPT-4: The Premium Powerhouse

GPT-4, the reigning champion of LLMs, comes at a premium price.

- Pricing: $5 per million input tokens and $15 per million output tokens.

- Context Window: Offers a 128K context window.

While GPT-4's multimodal capabilities (including vision) are impressive, its pricing reflects its position as a top-tier, feature-rich model.
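To see what those per-token prices mean for a single request, here is a small sketch of the arithmetic. The prices are the ones listed above. The workload (a 100,000-token document and a 1,000-token summary) is a made-up example, and `requestCost` is a hypothetical helper, not part of any vendor SDK.

```javascript
// Prices in US dollars per million tokens, as listed in this article.
const PRICING = {
  "Claude 3.5 Sonnet": { input: 3, output: 15 },
  "GPT-4": { input: 5, output: 15 },
};

// Cost of one request given its input and output token counts.
function requestCost(model, inputTokens, outputTokens, pricing = PRICING) {
  const price = pricing[model];
  if (!price) throw new Error(`No pricing listed for ${model}`);
  return (inputTokens / 1e6) * price.input + (outputTokens / 1e6) * price.output;
}

// Hypothetical workload: a 100,000-token document and a 1,000-token summary.
for (const model of Object.keys(PRICING)) {
  console.log(model, "$" + requestCost(model, 100_000, 1_000).toFixed(3));
}
```

For a summarization job like this, the input side dominates the bill, so the gap between the two models comes almost entirely from the input price.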

The Verdict (For Now):

Gemini Pro 1.5's free beta access makes it incredibly appealing for early adopters and developers looking to explore the potential of a million-token context window. However, it remains to be seen how Google will approach pricing once the model is more widely available.

The Good, the Bad, and the (Potentially) Ugly: A Balanced Look at Gemini Pro 1.5

No AI is perfect (yet!), and Gemini Pro 1.5 is no exception. Here's a balanced perspective on its strengths, weaknesses, and the potential implications of its existence:

The Good: Pushing the Boundaries of Context

- Million-Token Marvel: The sheer size of Gemini's context window is a game-changer. It opens up possibilities for processing and understanding information that were simply inconceivable before.

- Video Comprehension (A Glimpse into the Future): Gemini's ability to analyze and extract insights from video content (like we saw with the Mercedes earnings call) is a glimpse into a future where AI can make sense of the world much like we do.

- Free Beta Access (For Now): Google's decision to offer free access during the private beta is a smart move, allowing developers and researchers to push the model to its limits and explore its potential.

The Bad: Glitches, Hallucinations, and the Need for Speed

- Broken Code and Hallucinations: As we witnessed with the open-ended web development challenge and the "Burger King" legal test, Gemini is prone to errors in reasoning and code generation. It can hallucinate information and miss crucial details, highlighting the need for careful human oversight.

- Speed Demons Need Not Apply: Gemini can be quite slow, especially when processing large amounts of data. This might make it less suitable for real-time applications or tasks that require quick turnarounds.

- The Black Box Problem: Like many LLMs, Gemini's inner workings remain somewhat opaque. This lack of transparency can make it difficult to understand why it makes certain decisions or generates specific outputs, which is crucial for building trust and ensuring ethical use.

The (Potentially) Ugly: A Future of Information Overload?

- The Death of Retrieval-Based AI? Gemini's massive context window poses a challenge to companies focused on retrieval-based AI, where models rely on retrieving relevant information from external databases. If large context windows become the norm, the need for separate retrieval mechanisms might diminish, potentially disrupting this segment of the AI industry.

- Even Larger Models, Even Bigger Questions: If 1 million tokens are possible today, what about tomorrow? The pursuit of ever-larger models raises important questions about computational costs, energy consumption, and the potential environmental impact of AI development.

- The Ethics of Immense Knowledge: As AI models become more knowledgeable, the ethical implications become more profound. How do we ensure these models are used responsibly? How do we prevent bias and misinformation from being amplified? These are questions we'll need to grapple with as AI continues to evolve.