Fine-Tuning Large Language Models (LLMs)

Simple Definition

Fine-tuning a large language model means teaching it to specialize! Imagine the model is a high school graduate who knows a little about many topics. Fine-tuning is like that student going on to university and focusing on one subject, so they become much better at answering questions and solving problems in that area.

Technical Definition

Fine-tuning a large language model (LLM) involves taking a pre-trained model and continuing its training on a new, smaller dataset specific to a particular task or domain. During fine-tuning, the model's weights are adjusted using the new dataset, allowing it to adapt its knowledge and behavior to the desired application. This process is distinct from training a model from scratch and leverages the general knowledge learned during pre-training.
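
To make the idea of "continuing training from pre-trained weights" concrete, here is a deliberately tiny sketch. It is not an LLM: it uses a two-parameter linear model and plain gradient descent, and every name in it (`train`, `mse`, the two toy datasets, the learning rates) is an assumption chosen for illustration. What it does show is the same pattern: train on a large general dataset first, then keep training the same weights on a small task-specific dataset.

```python
import random


def train(weights, data, lr, epochs):
    """Run full-batch gradient descent on a linear model y = w * x + b."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / len(data)
            grad_b += 2 * err / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def mse(weights, data):
    """Mean squared error of the model on a dataset."""
    w, b = weights
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(0)

# "Pre-training" data: large and general (toy rule: y = 2x + 1).
general = [(x, 2 * x + 1) for x in (rng.uniform(-1, 1) for _ in range(200))]

# "Fine-tuning" data: small and task-specific (toy rule: y = 2.5x + 0.5).
task = [(x, 2.5 * x + 0.5) for x in (rng.uniform(-1, 1) for _ in range(20))]

# Pre-train from scratch (weights start at zero).
pretrained = train((0.0, 0.0), general, lr=0.1, epochs=200)

# Fine-tune: start from the pre-trained weights, not from zero.
# The smaller learning rate is an illustrative choice, not a rule.
fine_tuned = train(pretrained, task, lr=0.05, epochs=200)

print("task error before fine-tuning:", mse(pretrained, task))
print("task error after fine-tuning: ", mse(fine_tuned, task))
```

The only difference between the two `train` calls is the starting point and the data: fine-tuning reuses what pre-training already learned instead of starting over.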

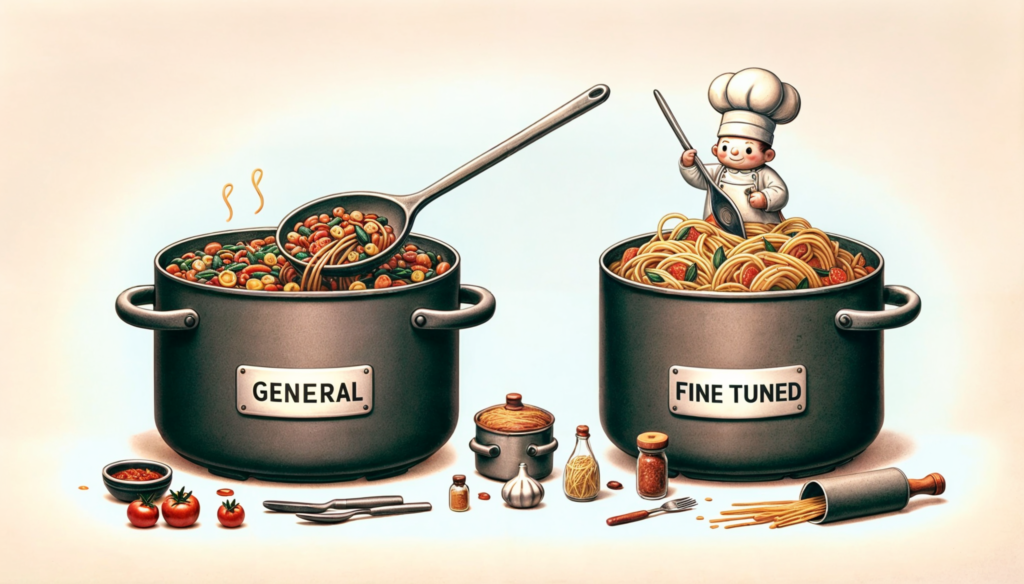

Definition with a Metaphor

Think of an LLM as a versatile chef who knows how to cook various dishes. Fine-tuning is like giving the chef a specialized cookbook about a specific cuisine (say, Italian). After studying the cookbook, the chef becomes an expert in Italian cuisine while largely retaining the ability to cook other dishes. The chef can now create Italian recipes that are more accurate and refined than before.

Why Fine-Tuning Matters 🌟

Fine-tuning a large language model (LLM) involves training it further on a specific dataset to tailor its responses to particular use cases. It's crucial because:

- 🎨 Customization: Adapts the model to specific domains (e.g., programming, medical).

- 🎯 Accuracy: Improves the quality and relevance of responses.

- ⚡ Efficiency: Reduces the need for extensive prompt engineering.

How to Choose a Dataset for Fine-Tuning?

When selecting a dataset for fine-tuning your LLM, consider the following factors:

- Choose a general knowledge dataset when you want your LLM to have a broad understanding of various topics and be able to engage in general conversations.

- Examples include Wikipedia, Common Crawl, and BookCorpus, which cover a wide range of subjects and writing styles.

- Fine-tuning on a general knowledge dataset suits tasks such as question answering, text completion, and general-purpose chatbots.

- Opt for a domain-specific dataset when you need your LLM to excel in a particular field or industry, such as medical, legal, or financial domains.

- Domain-specific datasets contain specialized vocabulary, jargon, and knowledge unique to the target domain.

- Fine-tuning on a domain-specific dataset is ideal for applications like medical diagnosis assistants, legal document analysis, or financial forecasting tools.

- Consider using a mixed dataset that combines general knowledge and domain-specific data when you want your LLM to have a strong foundation in general topics while possessing expertise in a specific area.

- A mixed dataset allows the model to maintain its broad knowledge base while adapting to the nuances and intricacies of the target domain.

- Fine-tuning on a mixed dataset suits applications that balance general conversational abilities and domain-specific expertise, such as customer support chatbots or virtual assistants for niche industries.
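
The mixed-dataset option above can be sketched in a few lines. This is a minimal illustration under stated assumptions: `build_mixed_dataset` is a hypothetical helper, the example strings are placeholders, and the 30% domain share is an arbitrary value, not a recommended ratio. The right mix depends on the application and is usually found by experiment.

```python
import random


def build_mixed_dataset(general, domain, domain_fraction, size, seed=0):
    """Sample `size` examples, with about `domain_fraction` of them from `domain`."""
    if not 0.0 <= domain_fraction <= 1.0:
        raise ValueError("domain_fraction must be between 0 and 1")
    rng = random.Random(seed)
    n_domain = round(size * domain_fraction)
    n_general = size - n_domain
    # Sample with replacement so a small domain set can be upsampled.
    mixed = rng.choices(domain, k=n_domain) + rng.choices(general, k=n_general)
    # Shuffle so the two sources are interleaved during training.
    rng.shuffle(mixed)
    return mixed


# Placeholder data standing in for real general and domain-specific examples.
general_examples = [f"general example {i}" for i in range(100)]
domain_examples = [f"support ticket {i}" for i in range(10)]

mixed = build_mixed_dataset(
    general_examples, domain_examples, domain_fraction=0.3, size=50
)
print(len(mixed), "examples in the mixed dataset")
```

Setting `domain_fraction` to 0.0 or 1.0 gives the purely general and purely domain-specific cases, so the same helper covers all three options in the list.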

Expert Q&A

Q1: Why is fine-tuning important for LLMs?

A: Fine-tuning matters because it lets a general-purpose model specialize in a specific task. For example, an LLM pre-trained on a broad dataset might know a bit about medical concepts, but fine-tuning it on a medical dataset can help it give more accurate and relevant answers to medical questions. This specialization improves performance, relevance, and accuracy within the target domain.

Q2: What are the critical steps in fine-tuning an LLM?

A: The key steps are:

Q3: Are there any challenges in fine-tuning LLMs?

A: Yes, some challenges include: